Summary

Overview

This report presents the findings of a content analysis of posts on X (formerly Twitter) made between October 7, 2023, and October 20, 2023, and collected on October 20. The three datasets focused on different topics: Israel-Hamas, Ukraine, and vaccines. Each dataset was defined by a set of keywords and a like-count filter that excluded tweets below a minimum threshold. For more information about the definitions and filters, see the Appendix.

The dataset can be found on the Harvard Dataverse. For a simpler version of this report, see the brief.

Key Findings

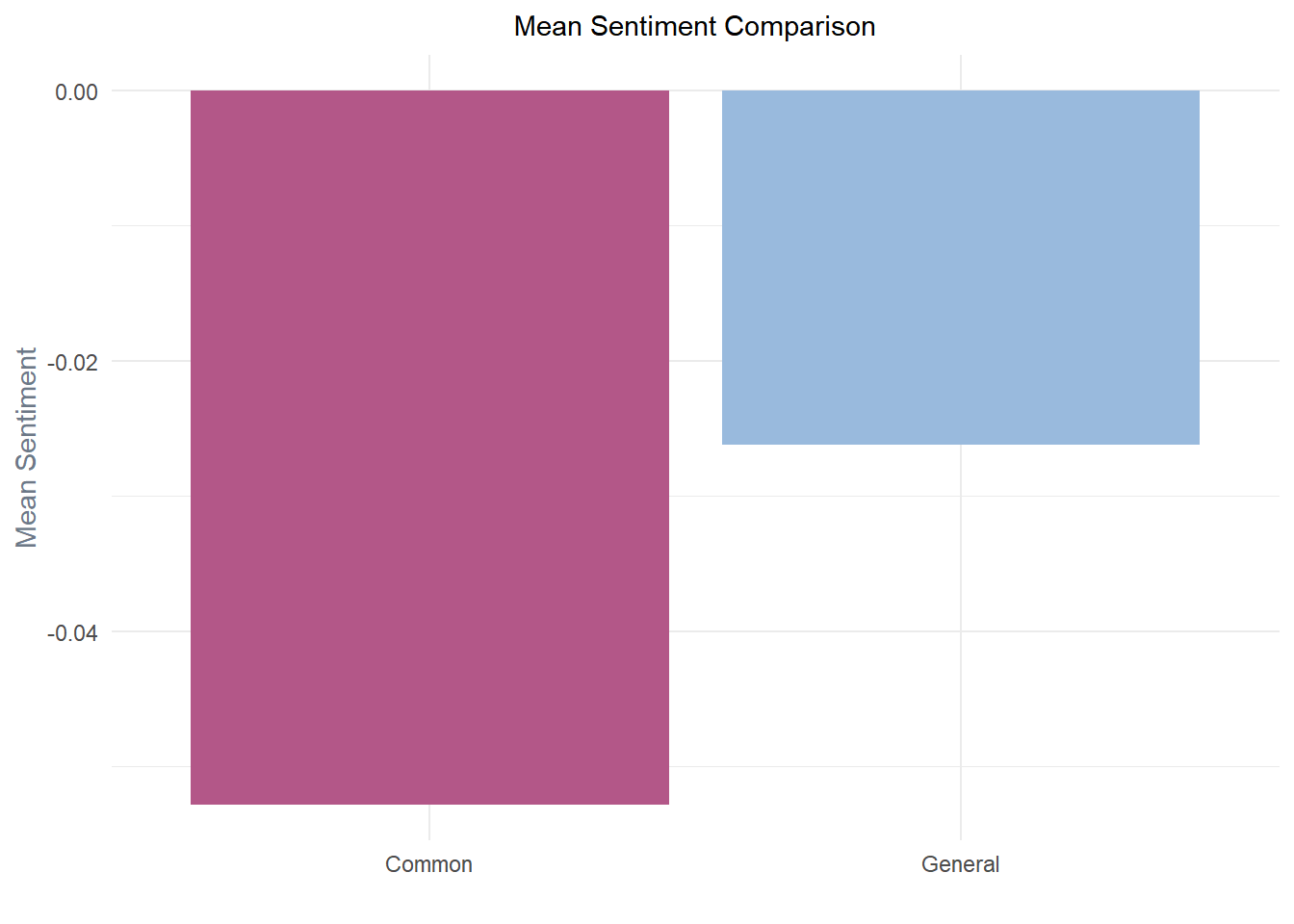

Tweets from accounts that appear in all three datasets (henceforth “trio-authors”) were more negative in sentiment than the General data. The difference between the trio-authors’ sentiment scores and the General sample was statistically significant (p-value = 8.912e-05).

Groups compared: a general keyword sample (General), authors with high-performance tweets on one topic (In-One), authors with high-performance tweets on two topics (In-Two), and accounts that had high-performance tweets in all three datasets (Common).

This aligns with past research findings that negative words and sentiments increase click-through rates and that partisans similarly use negative emotions to increase engagement with followers. The trio-authors include 37 accounts that posted 579 high-performance tweets.

In addition to posting more negative content on average, some trio-authors selectively presented facts, used emotionally charged language, promoted us-versus-them or good-versus-evil framing, encouraged distrust or grievance, and sometimes shared misleading or entirely false information.

Given the platform owner’s stated “[wish] to become an accurate source of information,” these findings suggest the current approach has failed. Many of these accounts have shared blatantly false information about Ukraine, Israel, Hamas, or vaccines, either during the collection period or in the past. This shows that such falsehoods can reach millions of people, including those directly affected, at critical moments.

Some accounts’ engagement was unsurprising, such as that of Israel’s official account in the wake of the Hamas attack. Other accounts with no apparent connection, experience, or informed insight into the situation sometimes exceeded the engagement and impressions of credible media outlets and relevant leaders. This highlights a vulnerability that could easily be weaponized to threaten public health and national security.

Impressions and Engagement for Authors in All Three Datasets

A more in-depth discussion of narratives and themes from this report can be found in the section Narratives and Themes. Broadly, many claims did not fall on a true-false spectrum but instead leveraged the situations to advance a worldview or ideological issue.

Tweet (2,568,593 impressions): “In the past six hours, I’ve seen more war footage out of Israel than in the entirety of the ‘war’ in Ukraine. Why is that?”

Tweet (1,634,256 impressions): “But why do you need an AR-15 with 30 rounds?!” Because the world just watched Hamas go door-to-door slaughtering unarmed Israelis. The government won’t always be there to protect you so you must protect yourself.

Misleading and even blatantly false content appeared in every dataset. This is noteworthy because the data collection excluded tweets below a minimum number of likes, so every post in the data was seen by, or engaged with, many users.

False Claims

Here are some examples of false claims.

Tweet (1,124,170 impressions): “Health Canada has confirmed the presence of a Simian Virus 40 (SV40) DNA sequence in the Pfizer COVID-19 vaccine, which the manufacturer had not previously disclosed” “The polyomavirus Simian Virus 40, an oncogenic DNA virus, was previously removed from polio vaccines due to concerns about a link to cancer.”

Tweet (2,368,519 impressions): Mayo Clinic quietly updates website to say Hydroxychloroquine can be used to treat Covid patients Doctors were fired and censored for saying this Media smeared it All because Big Pharma couldn’t have any therapeutic drugs available in order to make billions from vaccine EUA

Tweet (1,726,823 impressions): Trudeau regime puts Canadian detective on trial for investigating link between infant deaths and mRNA vaccines

In one example from the Israel-Hamas dataset, an influential user posted:

BREAKING: Israeli Commander Nimrod Aloni has been captured by Hamas in the ongoing war. Analyst: “This is big. Israel is meant to be a big military force. This shows the extent to which they are on the back foot and not ready to respond to this attack. This also shows the extent of the Hamas operation”

Nimrod Aloni is the commander of the Depth Corps

AP later refuted the claim after it spread widely, and the IDF has since shared recent footage of Aloni on X. The IDF-affiliated post received a little over 25,000 impressions, while the original post received over 18,000,000. The AP fact check came two days after the post on X, and the post remains live as of October 23, 2023.

Account Engagement

Israel-Hamas dataset

| Author | Total Engagements | Total Impressions |
|---|---|---|
| jacksonhinklle | 19218252 | 679973893 |
| CensoredMen | 4925487 | 369476565 |
| Israel | 3526237 | 459500344 |
| MuhammadSmiry | 2594957 | 68324877 |
| POTUS | 2537765 | 340652078 |
| Lowkey0nline | 2512884 | 179758327 |
| IDF | 2428147 | 181347814 |
| SaeedDiCaprio | 2330893 | 108699755 |
| DrLoupis | 2214116 | 94858821 |
| Timesofgaza | 2138036 | 157367086 |
| omarsuleiman504 | 1924753 | 72284909 |
| AlanRMacLeod | 1429264 | 61762418 |
| elonmusk | 1394980 | 195852974 |
| sahouraxo | 1356829 | 42989307 |
| visegrad24 | 1296797 | 166124915 |
| CollinRugg | 1231792 | 185504815 |
| benshapiro | 1221677 | 113066349 |
| m7mdkurd | 1057071 | 41099224 |
| spectatorindex | 1021016 | 126048849 |
| PopBase | 938309 | 273961685 |
| netanyahu | 936738 | 71270721 |
| TheMossadIL | 936563 | 40986864 |
| narendramodi | 918653 | 62142798 |
| HoyPalestina | 915786 | 34707055 |
| Benzema | 874857 | 49135364 |

Ukraine dataset

| Author | Total Engagements | Total Impressions |
|---|---|---|
| jacksonhinklle | 3531000 | 127852680 |
| ZelenskyyUa | 1196890 | 65930171 |
| DrLoupis | 820074 | 47488485 |
| DefenceU | 607144 | 25701067 |
| PicturesFoIder | 518568 | 116221609 |
| Gerashchenko_en | 504582 | 26163713 |
| visegrad24 | 466111 | 27457934 |
| JoeyMannarinoUS | 417685 | 17728778 |
| WallStreetSilv | 331054 | 66026305 |
| KyivIndependent | 328912 | 23100337 |
| Byoussef | 327366 | 21190268 |
| IAPonomarenko | 298860 | 30457140 |
| GuntherEagleman | 287998 | 5264082 |
| JayinKyiv | 283543 | 12823311 |
| KimDotcom | 243281 | 20518243 |
| PopBase | 241346 | 13360457 |
| reshetz | 238600 | 6248712 |
| Partisangirl | 235884 | 7204375 |
| RealScottRitter | 233739 | 6935045 |
| bayraktar_1love | 209168 | 24171150 |
| Maks_NAFO_FELLA | 198582 | 6716324 |
| WarClandestine | 198556 | 10394227 |
| AlanRMacLeod | 185633 | 5997330 |
| RepMTG | 172240 | 6050145 |
| MarinaMedvin | 170372 | 11632313 |

Vaccine dataset

| Author | Total Engagements | Total Impressions |
|---|---|---|
| DiedSuddenly_ | 1514695 | 66681704 |
| VigilantFox | 546347 | 27888914 |
| iluminatibot | 492601 | 21401507 |
| LeadingReport | 385857 | 16254116 |
| Resist_05 | 376731 | 27704353 |
| TheChiefNerd | 353561 | 42440280 |
| robinmonotti | 284548 | 14285553 |
| vivekagnihotri | 280024 | 5100486 |
| P_McCulloughMD | 269386 | 8772643 |
| DC_Draino | 269093 | 22988051 |
| kevinnbass | 265839 | 12723592 |
| DrLoupis | 251139 | 9625041 |
| PeterSweden7 | 215835 | 5230555 |
| WallStreetSilv | 209764 | 34754914 |
| CollinRugg | 182827 | 25632320 |
| Inversionism | 166138 | 8052192 |
| MattWallace888 | 163056 | 15187004 |
| MakisMD | 161204 | 8534635 |
| TexasLindsay_ | 155928 | 8892411 |
| RWMaloneMD | 140618 | 4190681 |
| NobelPrize | 139554 | 20671549 |
| liz_churchill10 | 136745 | 2508441 |
| goddeketal | 136117 | 8191162 |
| elonmusk | 127301 | 5722954 |
| Travis_in_Flint | 125725 | 7751633 |

One of the most striking findings in this analysis was how far a single user could outperform the most relevant voices. Although the account owner has worked in national grassroots campaigns, there is no apparent personal connection, education, or work history related to the topics they now dominate.

Despite this, the account outperformed heads of state in both the Israel-Hamas and Ukraine datasets, posting 292 high-performance tweets that garnered 22.7 million engagements and 807.8 million impressions in total.

President Volodymyr Zelenskyy of Ukraine received about 1.2 million engagements (1,196,890) over the two weeks, while this account received about 3.5 million engagements related to Ukraine, nearly three times as many.

Exceptional tweets from this user were not limited to the topic of Ukraine. They posted more tweets receiving 20,000 or more likes than Israel’s official account, the Israel Defense Forces, and the President of the United States combined.

The account owner has a history of promoting and appearing on Russian state media, spreading misleading claims about events, and casting doubt on specific incidents.

In April 2022, the account owner raised questions about the Bucha massacre:

“How did forces kill 410 civilians in Bucha, Ukraine after they had completely left Bucha, Ukraine days earlier?” – April 4, 2022

“When it comes to the Bucha massacre, facts are not the friends of the Ukrainian narrative.” – April 6, 2022

The situation captured in the Israel-Hamas dataset is particularly concerning. Even as the country was dealing with a lethal attack, posts from this account overshadowed community leaders, people affected by the situation, credible sources of information, and relevant experts.

Sentiment Analysis

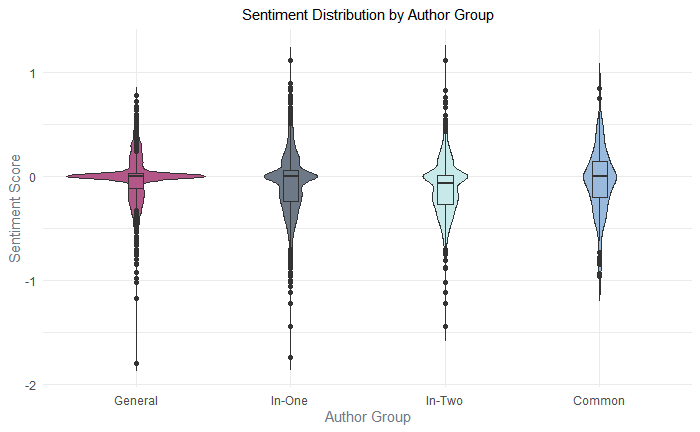

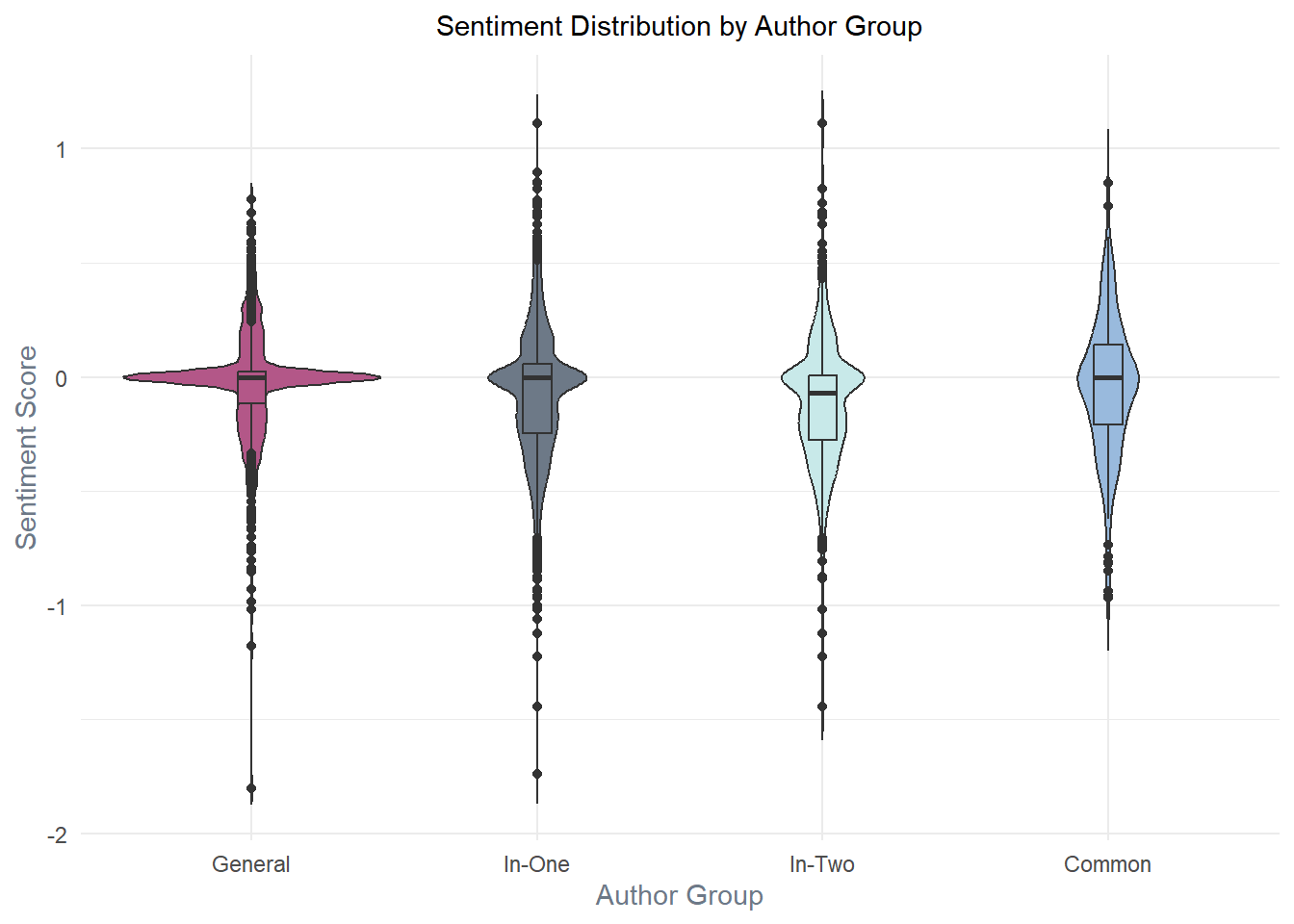

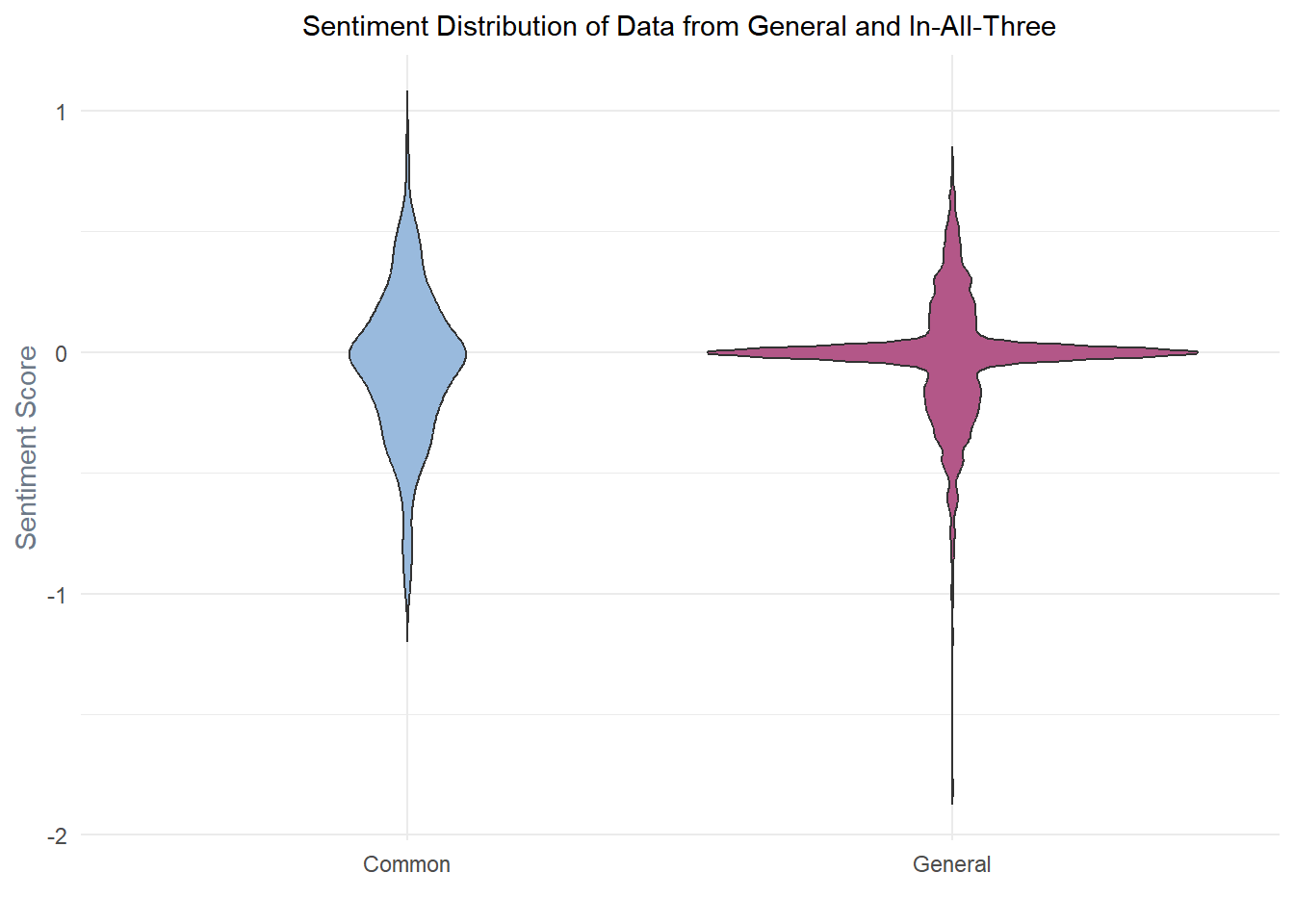

Figure 2 shows violin and internal box plots of the sentiment scores (on a scale from -1 to 1). Each plot represents a specific group: General, In-One, In-Two, and Common. General is a sample collected using the Israel-Hamas dataset keywords with no like filter, meaning it collected all tweets that matched at least one keyword. The collection took place on October 7 and October 13. Because the tweets were not selected on anything beyond the keywords, this sample gives a better idea of the range of views and sentiments expressed on the topic.


A violin is wider at sentiment scores that occur more often and narrower at scores that fewer posts share.

- The General group displays a unimodal distribution concentrated around the median, which is at or near zero. This concentration means the most common sentiment score was neutral or near-neutral.
- The In-One group, which reflects posts from authors with a high-performance tweet in only one of the three topics, has a less pronounced concentration around neutral sentiment, although neutral is still the most common score.
- The In-Two group has a similar pattern to In-One but with a slightly narrower peak, suggesting somewhat less concentration around the median. Here, the scores are slightly more polarized, with fewer neutral posts.
- The Common group shows a somewhat bimodal distribution, with one peak near the median and another at more negative scores. This pattern suggests two distinct subgroups within this category: one with neutral sentiment and another with more negative sentiment.
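To make the width interpretation concrete, here is a minimal sketch on made-up toy scores (not the report's data). It assumes the violins use ggplot2's defaults, which estimate the density with a Gaussian kernel and the `nrd0` bandwidth rule, the same defaults as base R's `density()`; each violin is widest where this estimate peaks.

```r
# Toy sentiment scores (made up for illustration, not the report's data)
scores <- c(-0.5, -0.2, 0, 0, 0, 0, 0.1, 0.3)

# Kernel density estimate with base R defaults (Gaussian kernel, "nrd0" bandwidth)
d <- density(scores)

# The violin is widest at the score where the density estimate peaks
peak_score <- d$x[which.max(d$y)]
cat("Bandwidth:", round(d$bw, 3), "\n")
cat("Widest point of the violin at score:", round(peak_score, 2), "\n")
```

Because four of the eight toy scores are exactly zero, the peak, and therefore the widest part of the violin, sits at or very near zero, mirroring the neutral concentration described for the General group.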

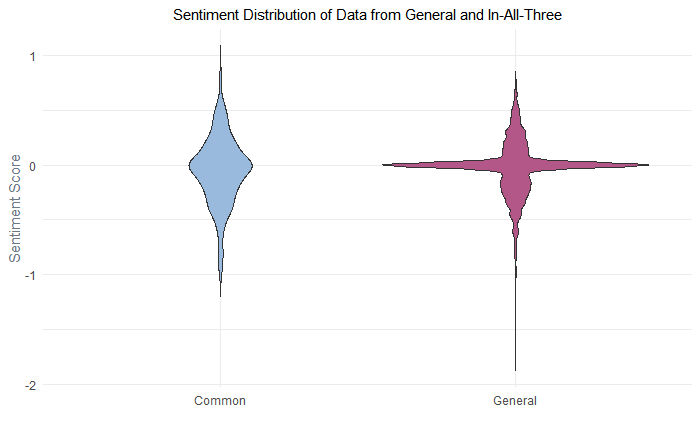

Differences were greatest between the General and Common groups.
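One way to quantify these differences is with the summary statistics (mean, median, mode, standard deviation) printed for the Common and General groups later in this section. The sketch below is hypothetical: `summarize_sentiment()` is an illustrative name, not a function from the report's code, and it treats the mode as the most frequent exact value, since base R has no built-in statistical mode.

```r
# Hypothetical helper: summary statistics for a vector of sentiment scores
summarize_sentiment <- function(x, label) {
  x <- x[!is.na(x)]  # drop missing scores before summarizing
  # Mode as the most frequent exact value (base R has no statistical mode function)
  counts <- table(x)
  mode_value <- as.numeric(names(counts)[which.max(counts)])
  # %s formats numbers with as.character(), i.e. up to 15 significant digits
  sprintf("%s sentiments - Mean: %s Median: %s Mode: %s Standard Deviation: %s",
          label, mean(x), median(x), mode_value, sd(x))
}

# Usage with toy scores (not the report's data)
print(summarize_sentiment(c(-0.5, 0, 0, 0.5), "Toy"))
```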

```r
library(ggplot2)

# Combine the per-group data frames (each has `sentiment` and `author_group` columns)
plot_data <- rbind(df_common_all, df_common_two, df_single, df_general)

# Order the groups from least to most cross-topic activity
plot_data$author_group <- factor(plot_data$author_group,
                                 levels = c("General", "In-One", "In-Two", "Common"))

brand_colors <- c("General" = "#B35788", "In-One" = "#6D7987",
                  "In-Two" = "#C8E9E9", "Common" = "#99BADD")

# Violins show the density of scores; the narrow box plots mark the median and IQR
p <- ggplot(plot_data, aes(x = author_group, y = sentiment, fill = author_group)) +
  geom_violin(trim = FALSE) +
  geom_boxplot(width = 0.1) +
  scale_fill_manual(values = brand_colors) +
  labs(title = "Sentiment Distribution by Author Group",
       x = "Author Group",
       y = "Sentiment Score") +
  theme_minimal() +
  theme(legend.position = "none",                                     # x-axis labels already name the groups
        plot.background = element_rect(fill = "white", color = NA),   # brand background color
        panel.background = element_rect(fill = "white", color = NA),  # match the plot background
        text = element_text(color = "#6D7987"),                       # brand text color
        axis.title = element_text(size = 11, color = "#6D7987"),      # axis title size and color
        plot.title = element_text(hjust = 0.5, color = "black", size = 11))  # centered title

# Display the plot
print(p)
```

```r
library(ggplot2)

# Extract the sentiment scores for the two groups being compared
sentiments_common_all <- df_common_all$sentiment
sentiments_general <- df_general$sentiment

# Stack them into one long data frame with a group label
combined_data <- data.frame(
  sentiment = c(sentiments_common_all, sentiments_general),
  group = c(rep("Common", length(sentiments_common_all)),
            rep("General", length(sentiments_general)))
)

brand_colors <- c("Common" = "#99BADD", "General" = "#B35788")

ggplot(combined_data, aes(x = group, y = sentiment, fill = group)) +
  geom_violin(trim = FALSE) +
  scale_fill_manual(values = brand_colors) +
  labs(title = "Sentiment Distribution of Data from General and In-All-Three",
       x = "",
       y = "Sentiment Score") +
  theme_minimal() +
  theme(
    legend.position = "none",                                     # x-axis labels already name the groups
    plot.background = element_rect(fill = "white", color = NA),   # brand background color
    panel.background = element_rect(fill = "white", color = NA),  # match the plot background
    text = element_text(color = "#6D7987"),                       # brand text color
    axis.title = element_text(size = 11, color = "#6D7987"),      # axis title size and color
    plot.title = element_text(hjust = 0.5, color = "black", size = 11)  # centered title
  )
```

```
[1] "Common sentiments - Mean: -0.0533711331844772 Median: 0 Mode: 0 Standard Deviation: 0.309462099890748"
[1] "General sentiments - Mean: -0.0261762691433522 Median: 0 Mode: 0 Standard Deviation: 0.244751182937714"
```

Narratives and Themes

The themes across the three datasets (Israel-Hamas, Ukraine, and Vaccine) share several similarities, particularly in the areas of distrust, conspiracy theories, polarization, and calls for action. However, they also have distinctive elements rooted in the specific contexts of each topic.

Similarities:

Distrust and Conspiracy Theories: High-performance tweets across the three datasets carried a strong current of distrust towards authorities or established systems. Whether it was skepticism towards the US administration and global institutions (Israel-Hamas), doubt over financial aid (Ukraine), or mistrust in COVID-19 vaccines and health authorities (Vaccine), distrust was present in every dataset. Conspiracy theories were common in all three categories. The stories suggested hidden agendas or concealed truths in global politics, financial aid, or public health initiatives.

Polarization and ‘Us vs. Them’ Mentality: The “us vs. them” narrative is a common thread, creating divisions between different groups. In the Israel-Hamas context, it appears as polarization between geopolitical factions. With Ukraine, the discussion was more about internal and international political divisions. In the Vaccine dataset, it manifested as a division between those who trust the vaccines and health authorities and those who don’t.

Calls for Action Against Injustice: Across all topics, there’s a strong call to action and a sense of urgency to address perceived injustices. This might involve challenging leadership decisions, questioning financial aid, or demanding transparency and safety in public health interventions.

Global Impact: Each theme acknowledges the broader, global implications of the issues at hand, whether it’s international conflict, financial decisions affecting multiple countries, or a worldwide public health crisis.

Differences:

Specific Focus of Distrust and Conspiracy Theories: While distrust and conspiracy theories are common, their focus varies. In the Israel-Hamas dataset, conspiracy theories revolve around geopolitical conflict and international relations. Related to Ukraine, they’re more about financial aid and corruption. For the Vaccine dataset, stories concentrate on public health, vaccine safety, and the potential ulterior motives of global health authorities.

Cultural and Ethical Considerations: The Israel-Hamas dataset uniquely highlights the role of religious and ethical considerations in shaping narratives. This aspect is less prominent in the Ukraine and Vaccine datasets, which are more politically and scientifically oriented.

Nature of Polarization: The nature of the “us vs. them” mentality also differs. In the Israel-Hamas context, it’s largely geopolitical. In the Ukraine dataset, it’s tied to national interests versus international aid and sometimes to morality or friendship. Vaccine discussion is more about differing beliefs in science and authority.

Type of Action Advocated: The kind of action advocated varies, from military intervention (Israel-Hamas) to financial accountability and anti-corruption measures (Ukraine) to vaccine safety and informed consent (Vaccine).

Israel-Hamas Dataset Themes:

Conflict and Polarization: Focus on global unrest, power struggles, and a strong “us vs. them” narrative.

Distrust in Leadership and Institutions: Skepticism towards the US administration, global institutions, and leaders, coupled with a sense of abandonment.

Conspiracy and Misinformation: The prevalence of conspiracy theories and misinformation, especially regarding political decisions and global events.

Humanitarian Concern vs. Political Cynicism: Tension between the desire to assist in humanitarian crises and disillusionment with political forces.

National Identity and Patriotism: Discussions reflecting nationalism, patriotism, and feelings of betrayal due to international policies.

Religious and Ethical Aspects: The significant role of religious and ethical considerations in evaluating global and domestic issues.

Social Activism and Public Opinion: Active societal involvement in activism, both genuine and performative, strongly emphasizing direct engagement and accountability.

Injustice and Moral Outrage: Expressions of moral outrage at perceived injustices and empathy towards marginalized groups.

Grievance Culture: A prevalent sense of grievance, promoting a country-first mentality and social divisions.

Global Uncertainty and Anxiety: An underlying anxiety about the future, manifested through references to global events.

Ukraine Dataset Themes:

Conspiracy Theories and ‘Good vs. Evil’: Narratives often delve into conspiracy theories and present the world in binary terms.

Injustice and Demand for Action: A strong sense of injustice and calls for urgent action to rectify perceived wrongs.

Financial Aid Distrust: Skepticism about financial aid to foreign countries, suggesting possible corruption or mismanagement.

Conspiracy and Self-Interest: Themes of conspiracy, hidden motives, and self-interest among leaders.

Hero vs. Villain Narrative: A clear demarcation between perceived “heroes” and “villains” in the public discourse.

Injustice and Urgency for Action: An urgent call for action to address perceived injustices.

Divisive Mentality: A strong “us vs. them” mentality within societal and political contexts.

International Consequences: Recognition of the global implications of financial and political decisions.

Vaccine Dataset Themes:

Vaccine Distrust and Authority Questioning: Deep mistrust in COVID-19 vaccines and questioning of the authorities involved in their development and distribution.

Conspiracy and Secrecy: The spread of conspiracy theories involving global authorities and vaccines.

Good vs. Evil Depiction: Framing the vaccine debate as a struggle between benevolent and malevolent forces.

Perceived Injustice and Calls for Action: A strong sense of injustice related to vaccines and calls for public action.

Divisive Thinking: Prominent “us vs. them” attitudes in vaccine narratives.

Global Impact and Variations: Acknowledgment of the global aspect of the vaccine debate and varying international approaches.

Statistical Significance

Statistical significance testing helps distinguish differences that could plausibly arise by chance from those that likely reflect a real difference.

The results show that authors who posted high-performance tweets about all three issues (Israel-Hamas, Ukraine, and vaccines) had sentiment scores that differed from the General data. Specifically, their posts were significantly more negative.

Wilcoxon Rank-Sum Test

The Wilcoxon rank-sum test helps us compare two separate groups to see if their scores tend to differ. It’s especially useful when you can’t assume the data follow a typical bell-shaped curve.

Here’s a breakdown of the test:

Null Hypothesis (H0): There’s no noteworthy difference in sentiment between the two groups.

Alternative Hypothesis (H1): One group’s sentiment scores tend to be systematically higher or lower than the other’s.

The p-value is the probability of seeing a difference at least as large as the one in this report if the null hypothesis were true:

Small p-value (≤ 0.05): The difference is probably not due to chance.

Large p-value (> 0.05): Any difference might just be a coincidence.
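The p-value definition above can be illustrated with a permutation test. This is a different technique from the Wilcoxon test, chosen because it makes the logic explicit: if the null hypothesis holds, group labels are interchangeable, so shuffling them shows how often a difference as large as the observed one arises by chance. A minimal sketch on toy data, comparing means rather than ranks:

```r
set.seed(1)  # make the random shuffles reproducible

# Toy sentiment scores for two groups (not the report's data)
group_a <- c(-0.6, -0.5, -0.4, -0.3, -0.2)
group_b <- c(0.1, 0.2, 0.3, 0.4, 0.5)

pooled <- c(group_a, group_b)
n_a <- length(group_a)
observed <- mean(group_a) - mean(group_b)

# Under H0, shuffle the group labels and recompute the difference in means
n_perm <- 10000
perm_diffs <- replicate(n_perm, {
  idx <- sample(length(pooled), n_a)
  mean(pooled[idx]) - mean(pooled[-idx])
})

# Two-sided p-value: share of shuffles at least as extreme as the observed difference
# (+1 in numerator and denominator counts the observed labelling itself)
p_value <- (sum(abs(perm_diffs) >= abs(observed)) + 1) / (n_perm + 1)
print(p_value)
```

Only the observed split and its mirror image reach the observed gap, so the estimated p-value is small, well below 0.05.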

The test reported a p-value of approximately 0.00008912, strong evidence of a difference in sentiment scores between the common authors and the General group.
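One pitfall is worth checking when reproducing this comparison in R: `wilcox.test()` runs the two-sample rank-sum test only when both vectors are supplied, and falls back to a one-sample signed rank test against 0 when the second argument is `NULL`, for example because a column name is wrong. The sketch below uses toy vectors (not the report's data) and inspects each result's `method` field to see which test actually ran.

```r
# Toy sentiment vectors (not the report's data); no ties or zeros, so exact tests run
common  <- c(-0.62, -0.41, -0.35, -0.10, 0.02, 0.05)
general <- c(-0.12, -0.03, 0.01, 0.04, 0.15, 0.22, 0.31)

# Two independent samples: Wilcoxon rank-sum (Mann-Whitney) test
two_sample <- wilcox.test(common, general)
print(two_sample$method)

# A missing or misspelled column evaluates to NULL
missing_column <- data.frame(sentiment = general)$score
stopifnot(is.null(missing_column))

# With y = NULL, wilcox.test() runs a one-sample signed rank test of `common` against 0
one_sample <- wilcox.test(common, missing_column)
print(one_sample$method)
```

A quick guard such as `stopifnot(is.numeric(sentiments_general))` before the test call would catch this case.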

Kruskal-Wallis Test

When there are more than two groups, you can use the Kruskal-Wallis test.

- Data: Sentiment scores were compared across the three topic datasets (Israel-Hamas, Ukraine, and Vaccine).

- Kruskal-Wallis chi-squared: The result was 33.437 with 2 degrees of freedom, indicating a noticeable difference between groups.

- p-value: The p-value is exceptionally small (5.486e-08), suggesting a real difference.

The test suggests that sentiment scores differ across the three topic datasets, a result we would be unlikely to see by chance.
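The Kruskal-Wallis statistic can be computed by hand from the ranks, which shows what the chi-squared value measures. This sketch uses toy scores for three hypothetical groups with no tied values. When there are ties, `kruskal.test()` also divides by a tie-correction factor, so the plain formula would differ slightly.

```r
# Toy scores for three hypothetical groups (all values distinct, so no ties)
scores <- c(-0.40, -0.25, -0.10, 0.05,
            -0.30, -0.05, 0.10, 0.20,
             0.15,  0.25, 0.35, 0.45)
group <- factor(rep(c("israel", "ukraine", "vaccine"), each = 4))

# H = 12 / (N (N + 1)) * sum(R_i^2 / n_i) - 3 (N + 1), where R_i is group i's rank sum
N <- length(scores)
rank_sums <- tapply(rank(scores), group, sum)
group_sizes <- tapply(scores, group, length)
H <- 12 / (N * (N + 1)) * sum(rank_sums^2 / group_sizes) - 3 * (N + 1)

# Compare with R's implementation; df = number of groups - 1
kw <- kruskal.test(scores ~ group)
print(c(manual = H, kruskal_test = unname(kw$statistic), df = unname(kw$parameter)))
```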

```r
# R code for statistical tests
library(dplyr)
library(readr)

# Trio-authors: accounts with high-performance tweets in all three datasets
common_authors <- Reduce(intersect, list(unique(israel$author),
                                         unique(ukraine$author),
                                         unique(vaccine$author)))

combined_data <- rbind(sentiment_israel, sentiment_ukraine, sentiment_vaccine)
common_authors_data <- combined_data[combined_data$author %in% common_authors, ]

sentiments_common <- common_authors_data$sentiment
# Note: if `general$sentiment` is NULL (e.g. a missing column), wilcox.test()
# silently runs a one-sample signed rank test on sentiments_common alone
sentiments_general <- general$sentiment

wilcox_result <- wilcox.test(sentiments_common, sentiments_general, paired = FALSE)
print(wilcox_result)
```

```
Wilcoxon signed rank test with continuity correction

data: sentiments_common
V = 45603, p-value = 8.912e-05
alternative hypothesis: true location is not equal to 0
```

```r
# Compare impression counts between the General sample and the Israel-Hamas dataset
impression_general <- general$impression_count
impression_israel <- israel$impression_count

mwu_result_impression <- wilcox.test(impression_general, impression_israel)
print(mwu_result_impression)
```

```
Wilcoxon rank sum test with continuity correction

data: impression_general and impression_israel
W = 21150, p-value < 2.2e-16
alternative hypothesis: true location shift is not equal to 0
```

```r
# Compare like counts between the same two datasets
like_general <- general$like_count
like_israel <- israel$like_count

mwu_result_like <- wilcox.test(like_general, like_israel)
print(mwu_result_like)
```

```
Wilcoxon rank sum test with continuity correction

data: like_general and like_israel
W = 18200, p-value < 2.2e-16
alternative hypothesis: true location shift is not equal to 0
```

```r
# Kruskal-Wallis test across the three topic datasets
sentiment_scores_israel <- data.frame(scores = sentiment_israel$sentiment, group = "israel")
sentiment_scores_ukraine <- data.frame(scores = sentiment_ukraine$sentiment, group = "ukraine")
sentiment_scores_vaccine <- data.frame(scores = sentiment_vaccine$sentiment, group = "vaccine")

combined_sentiment <- rbind(sentiment_scores_israel, sentiment_scores_ukraine, sentiment_scores_vaccine)
combined_sentiment$group <- as.factor(combined_sentiment$group)

kw_result <- kruskal.test(scores ~ group, data = combined_sentiment)
print(kw_result)
```

```
Kruskal-Wallis rank sum test

data: scores by group
Kruskal-Wallis chi-squared = 33.437, df = 2, p-value = 5.486e-08
```

Limitations

No study is perfect. Here are some factors that might have influenced the results:

Keyword Selection: If the keyword search was missing popular keywords, that could affect the results.

Timing: When the data were collected matters, especially given ephemeral tweeting (tweets deleted soon after posting). Public opinion shifts over time, especially in response to real-world events. To account for this, the collection window spanned two weeks.

Sample Representativeness: The “general” dataset is unlikely to reflect the broader population perfectly. It’s based on what was accessible, not a random sampling of all social media users.

Cultural Differences: Sentiments can be expressed differently across cultures, potentially skewing the interpretation of sentiment scores.

Language Nuances: Sarcasm, humor, and local slang are hard to interpret, even for humans, and might affect sentiment analysis.

Appendix

Definitions and Terms

- Author Groups: Categories of authors classified by their posting activity or the content they share. In this report, there are four groups: General, In-One, In-Two, and Common (labeled df_general, df_single, df_common_two, and df_common_all in the code).

- Common-Authors or Common_Authors_Data: Data containing the group of authors that appeared in all three datasets: Israel-Hamas, Ukraine, and Vaccine.

- Data Visualization: A graphical representation that displays the sentiment scores across four distinct author groups. It combines violin plots and internal box plots to show the distribution and density of sentiment scores.

- General (Group): When capitalized, this refers to a specific dataset in this report. Data for this group were collected using the Israel-Hamas dataset keywords with no like filter, meaning the collection included all tweets that matched at least one keyword. The collection took place on October 7 and October 13. The tweets were collected without selection beyond the keywords. Tweets that received more than 20,000 likes (7 tweets) were removed from the dataset.

- High-Performance: When referring to tweets, high performance means a tweet matched the Israel-Hamas keywords and received 20,000 or more likes, or matched the Ukraine or vaccine keywords and received 1,000 or more likes.

- Median: In the context of this data, the middle sentiment score in the distribution, with an equal number of scores more negative and more positive. Because the median here is at or near zero, a peak at the median indicates a high occurrence of neutral sentiment.

- Most Relevant Voices: In the context of a crisis, InfoEpi Lab defines the most relevant voices as credible media, community leaders and citizens from the affected area, relevant experts, humanitarian workers, and elected officials or agencies involved in responding to the situation.

- Sentiment Distribution: The spread of sentiment scores within the collected data, showing how frequently various sentiments, from negative to positive, are expressed in the posts.

- Sentiment Scores: Numerical scores ranging from -1 to 1, assigned to posts to represent the sentiment expressed. A score of -1 indicates a highly negative sentiment, 0 is neutral, and 1 is highly positive.

- In-One (Group): Accounts that had at least one high-performance tweet in exactly one of the three datasets.

- In-Two: Authors that had high-performance tweets in exactly two of the three datasets.

- Trio-Authors: Term for the group of authors that appeared in all three datasets: Israel-Hamas, Ukraine, and Vaccine.

- Violin Plots: A method of data visualization that shows the distribution of data and its probability density. The width of the plot represents the frequency of sentiments, with a wider section indicating a higher occurrence of that particular sentiment score.
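The In-One, In-Two, and Common groups follow from counting how many of the three datasets each author appears in. A minimal sketch, assuming one character vector of author handles per dataset; the vectors below are hypothetical toy data, and the last step reproduces the `Reduce(intersect, ...)` call used in the report's statistical code.

```r
# Hypothetical author handles, one entry per high-performance tweet in each dataset
israel_authors  <- c("a", "b", "c", "c")
ukraine_authors <- c("a", "b", "d")
vaccine_authors <- c("a", "e")

# Count the datasets each author appears in (unique() ignores repeat tweets)
appearances <- table(c(unique(israel_authors), unique(ukraine_authors), unique(vaccine_authors)))

# 1 dataset = In-One, 2 = In-Two, 3 = Common (trio-authors)
author_groups <- data.frame(
  author = names(appearances),
  author_group = c("In-One", "In-Two", "Common")[as.integer(appearances)],
  stringsAsFactors = FALSE
)
print(author_groups)

# The Common set matches the Reduce(intersect, ...) approach in the report's code
common_authors <- Reduce(intersect, list(unique(israel_authors),
                                         unique(ukraine_authors),
                                         unique(vaccine_authors)))
stopifnot(setequal(common_authors,
                   author_groups$author[author_groups$author_group == "Common"]))
```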

Variables

Keywords and Filters For Datasets

| Dataset | Keywords | Filter |
|---|---|---|
| Israel-Hamas | “israel,” “hamas,” “palestine,” “gaza” | 20,000 likes |
| Ukraine | “ukraine,” “ukrainians,” “mariupol,” “odesa,” “odessa,” “dpr,” “lnr,” “lpr,” “luhansk,” “lugansk,” “lviv,” “donbass,” “donbas,” “kharkiv,” “kherson,” “kiev,” “kyiv,” “crimea,” “chernobyl,” “right sector,” “ukronazi,” “sevastopol,” “rada,” “reznikov,” “zelenskyy,” “zelensky,” “zelenski,” “maidan,” “oblast,” “mykolaiv,” “azov,” “denazify,” “denazification” | 1,000 likes |
| Vaccine | “vaccine,” “vaccination,” “Pfizer,” “mRNA,” “diedsuddenly” | 1,000 likes |
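The keyword-plus-threshold rule in this table can be expressed as a small filter. This is a sketch under stated assumptions: it uses case-insensitive substring matching, which is broader than word matching (for example, “israel” also matches “israeli”) and may not match how the platform's search behaves. `is_high_performance()` and the toy tweets are hypothetical, and the Ukraine list is omitted for brevity.

```r
# Keyword lists and like thresholds from the table (Ukraine list omitted for brevity)
datasets <- list(
  "Israel-Hamas" = list(keywords = c("israel", "hamas", "palestine", "gaza"),
                        min_likes = 20000),
  "Vaccine" = list(keywords = c("vaccine", "vaccination", "Pfizer", "mRNA", "diedsuddenly"),
                   min_likes = 1000)
)

# TRUE where the text contains at least one keyword (case-insensitive substring match)
matches_any_keyword <- function(text, keywords) {
  text <- tolower(text)
  hits <- lapply(tolower(keywords), function(k) grepl(k, text, fixed = TRUE))
  Reduce(`|`, hits)
}

# Hypothetical filter: keyword match AND like count at or above the dataset threshold
is_high_performance <- function(text, like_count, spec) {
  matches_any_keyword(text, spec$keywords) & like_count >= spec$min_likes
}

# Usage with toy tweets (not the report's data)
tweets <- data.frame(
  text = c("Breaking news from Gaza", "New mRNA study", "Pfizer vaccine update", "Cooking tips"),
  like_count = c(25000, 500, 1500, 30000),
  stringsAsFactors = FALSE
)
print(is_high_performance(tweets$text, tweets$like_count, datasets[["Israel-Hamas"]]))
print(is_high_performance(tweets$text, tweets$like_count, datasets[["Vaccine"]]))
```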

Variables For Author Count Tables

| Variable | Description |
|---|---|
| author | The Twitter username of the author. |
| total_retweets | The total number of retweets for the author’s tweets. |
| total_likes | The total number of likes (favorites) for the author’s tweets. |
| total_quotes | The total number of times the author’s tweets were quoted. |
| total_replies | The total number of replies received on the author’s tweets. |
| total_engagements | The total engagement count, sum of retweets, likes, quotes, replies. |
| total_impressions | The total impressions of the author’s tweets, potential reach or views. |
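The author-level totals in the account tables can be derived from tweet-level data by grouping on `author` and summing. This base-R sketch assumes tweet-level columns named `retweet_count`, `like_count`, `quote_count`, `reply_count`, and `impression_count`. `like_count` and `impression_count` appear in the report's code, while the other three names are assumptions, and `summarize_authors()` is a hypothetical helper. Following the table above, `total_engagements` sums retweets, likes, quotes, and replies and excludes impressions.

```r
# Hypothetical helper: author-level totals from tweet-level data
summarize_authors <- function(tweets) {
  metrics <- c("retweet_count", "like_count", "quote_count", "reply_count", "impression_count")
  # Sum each metric per author; the grouping column comes first in the result
  totals <- aggregate(tweets[metrics], by = list(author = tweets$author), FUN = sum)
  names(totals) <- c("author", "total_retweets", "total_likes", "total_quotes",
                     "total_replies", "total_impressions")
  # Engagements = retweets + likes + quotes + replies (impressions reported separately)
  totals$total_engagements <- with(totals, total_retweets + total_likes +
                                     total_quotes + total_replies)
  # Sort from most to least engaged, as in the account tables
  totals <- totals[order(-totals$total_engagements), ]
  rownames(totals) <- NULL
  totals
}

# Usage with toy tweets (not the report's data)
tweets <- data.frame(
  author = c("a", "a", "b"),
  retweet_count = c(10, 5, 1),
  like_count = c(100, 50, 20),
  quote_count = c(1, 0, 0),
  reply_count = c(4, 2, 1),
  impression_count = c(1000, 800, 300)
)
print(summarize_authors(tweets))
```

In the document's own dplyr style, `group_by(author)` followed by `summarise()` would be an equivalent route.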

Packages required for this analysis

```r
library(dplyr)
library(tidytext)
library(ggplot2)
library(dygraphs)
library(flexdashboard)
library(sentimentr)
library(DT)  # provides datatable(), used below to display the top authors
```

Account Engagement from Israel-Hamas Data

```r
# View the top authors
datatable(top_authors_israel)
```

Israel-Hamas Dataset: This dataset was collected from Twitter on October 20, 2023. The data start on October 7, 2023. Keywords for the search query: “israel,” “hamas,” “palestine,” and “gaza,” with an additional filter set to capture posts with a minimum of 20,000 likes.

Account Engagement from Ukraine Dataset

Ukraine Dataset: Keywords for the search query focused on the Russian invasion of Ukraine: “ukraine” OR “ukrainians” OR “mariupol” OR “odesa” OR “odessa” OR “dpr” OR “lnr” OR “lpr” OR “luhansk” OR “lugansk” OR “lviv” OR “donbass” OR “donbas” OR “kharkiv” OR “kherson” OR “kiev” OR “kyiv” OR “crimea” OR “chernobyl” OR “right sector” OR “ukronazi” OR “sevastopol” OR “rada” OR “reznikov” OR “zelenskyy” OR “zelensky” OR “zelenski” OR “maidan” OR “oblast” OR “mykolaiv” OR “azov” OR “denazify” OR “denazification”, with an additional filter to limit posts to those with 1,000 likes or more.

Account Engagement from Vaccine Dataset

Vaccine Dataset: Keywords for this dataset included terms strongly associated with vaccine discussion online, “vaccine” OR “vaccination” OR “Pfizer” OR “mRNA” OR “diedsuddenly” with a filter set to exclude tweets that received fewer than 1,000 likes.

Narratives

Analyzing the narratives in the high-performance tweets revealed several themes and tropes that recur across the three datasets: Israel-Hamas, Ukraine, and Vaccine.

The narratives bear common themes of distrust, polarization, conspiracy theories, and calls for action. These stories reflect societal anxieties and deep-seated suspicions towards authorities and established systems, emphasizing a global struggle between perceived good and evil, truth and misinformation, and justice and corruption.

Israel-Hamas Dataset

Conflict and Polarization:

The messages heavily focus on conflicts involving Israel, Palestine, the broader Middle East, and Ukraine.

These stories draw parallels with domestic US issues, highlighting global unrest and instability. The Middle East and Ukraine situations are portrayed as global power struggles.

There’s a strong “us vs. them” narrative, with a clear division between perceived “good” and “evil” forces. This polarized view extends to domestic politics, framing issues in terms of stark opposition without much room for nuance or middle ground.

Distrust in Leadership and Institutions:

Much of the content expresses distrust in the US administration, global institutions, and leaders. They are often accused of incompetence, having ulterior motives, or being disconnected from the realities and needs of the general populace.

The narratives also suggest a perception of abandonment, where leaders prioritize international fame or diplomatic victories over their citizens’ immediate needs and security.

There’s also skepticism about the motives and actions of global institutions and other governments, with suggestions of hidden agendas and criticism of perceived inaction or counterproductive actions.

Conspiracy and Misinformation:

Several messages explore conspiracy theories, suggesting hidden agendas behind political decisions, especially involving foreign aid, diplomatic relations, and conflict resolutions.

These include accusations of undisclosed financial motives, secret alliances, and deliberate provocation of conflict. These interpretations and accusations lack supporting evidence and rely on speculative or sensationalist claims.

Factual events are frequently distorted or presented without context, aiming to drive fear, confusion, and further polarization among the public. Hyperpartisan or misleading interpretations contribute to the idea of contested or manipulated “truth.”

Humanitarian Concern vs. Political Cynicism:

Many messages express concern over the humanitarian crises in Gaza and the West Bank. However, there is cynicism about the political forces involved, with claims that aid is misused or insufficient.

There is tension between the desire to help and disillusionment with the entities responsible for providing aid.

National Identity and Patriotism:

Nationalism and patriotism are evident, especially in messages about US financial and military aid to other countries.

There is a sense of betrayal that the US is neglecting its citizens for international involvement.

Discussions about tax dollars reflect economic patriotism and a sense of ownership over national resources.

Religious and Ethical Aspects:

Religious language and imagery play a prominent role, especially in discussions about the Israeli-Palestinian conflict. This indicates that these issues are seen as more than political or territorial; they are considered moral and existential.

Ethical considerations are also present in discussions about leadership. Leaders are often evaluated based on their policies, perceived moral standing, and ethical behavior.

Social Activism and Public Opinion:

The narratives depict a society actively involved in both online and offline activism. There are references to protests, public opinion, and the influence of social media in shaping and amplifying these views.

In addition to genuine activism, there is a performative aspect, where support for various causes may be driven by trends, partisan groups, public pressure, or the desire for social validation rather than deep-rooted commitment.

Amid the criticism and outrage, there are clear calls for action and accountability. These narratives encourage direct engagement, whether through protest, raising awareness, or holding leaders responsible. They reflect a desire for change and a proactive stance against the current state of affairs.

Injustice and Moral Outrage:

Many messages express moral outrage at perceived injustices, especially in discussions about the Israeli-Palestinian conflict. Actions are condemned as genocidal, and financial decisions are viewed as corrupt or harmful to the public interest.

Empathy towards the underdog, victims, or marginalized groups is evident, along with a demand to acknowledge their struggles and condemn their oppressors.

Grievance Culture:

A strong sense of grievance promotes a country-first mentality, especially regarding the US. This is evident in criticisms of foreign aid while domestic issues are neglected.

Unrelated spending items (e.g., defense vs. social welfare programs) are falsely framed as competing with each other. This framing encourages narrow self-interest and ignores the strategic or defensive benefits of certain decisions and policies.

Strong identification with specific groups or beliefs can foster an ‘us versus them’ mentality, creating social divisions based on differences rather than common goals or shared experiences.

Global Uncertainty and Anxiety:

Posts express an underlying anxiety about the future, repeatedly mentioning events such as wars, resignations, and protests.

Ukraine Dataset

The Ukraine dataset narratives often explore conspiracy theories, depicting a world in stark ‘good vs. evil’ terms. They convey a strong sense of injustice and a demand for immediate action to address perceived wrongs. These stories express considerable skepticism about the handling of foreign aid by the government, suggesting corruption and conflicting national priorities.

These narratives also underscore a prevalent ‘us versus them’ mentality, indicating a divided world. Beyond identifying this split, the discussions acknowledge that the financial and political choices involved have global consequences. This points to a public that is actively engaged in, and worried about, its nation’s international interactions and internal governance.

Financial Aid Distrust and Government Decisions:

There’s a common narrative of doubt and dissatisfaction concerning financial aid to foreign countries, especially Ukraine. These texts suggest that such financial decisions are poorly handled, with money either not reaching its intended uses or not serving the American people.

Stories focus on the large sums allocated to Ukraine and question the transparency and accountability of these transactions. They imply that this financial assistance is mishandled, benefits corrupt officials, or is diverted from urgent domestic needs in the US.

Some posts imply intentional mismanagement or corruption among leaders in the US and recipient nations, alleging they place foreign conflicts above domestic needs.

Conspiracy and Self-Interest:

Several narratives touch on conspiracy theories, hinting at organized attempts by political groups or elites to redirect public funds for personal profit or hidden motives under the pretense of foreign aid. There’s a repeated theme of concealed intentions behind the considerable aid, particularly to Ukraine, and an absence of tangible results from this spending.

This storyline expands to include public figures and organizations in these conspiracies, with individuals like Ukrainian President Zelenskyy often cited in connection with wasted or misused “taxpayer money.”

Hero vs. Villain Narrative:

Texts frequently present the situation as a battle between the honest, industrious public and the corrupt, self-interested political elite. Those questioning the aid or pointing out fund mismanagement are depicted as public allies, while those supporting or profiting from the aid are portrayed negatively.

There is also a narrative of enlightenment versus ignorance. Those challenging the financial decisions are viewed as informed, while everyone else is seen as either complicit or naively unaware of the ongoing misappropriation.

Injustice and Urgency for Action:

The narratives are threaded with a potent sense of injustice and the sentiment that misdirected funds and the focus on foreign aid significantly detract from domestic concerns. Calls for reallocating resources to local issues, especially health, security, and economic matters, are common.

Many stories urge action, commonly through public protest, political engagement, or raising awareness about the suspected mismanagement of funds. These calls carry a sense of emergency and a moral obligation to “wake up” or hold leaders “accountable.”

Divisive Mentality:

The stories encourage a distinct “us vs. them” mindset, setting the public against political elites and policymakers. This conflict is depicted as a fight between communal welfare and the selfish desires of those in power.

This divide reaches into international politics, with some countries or groups shown as unfairly benefiting from misguided US priorities, often at the American people’s expense.

International Consequences and Differences:

The narratives recognize the global implications of these financial decisions, mentioning various countries and international scenarios. Different nations are shown as unjustly profiting from US aid or as participants in global conflicts that the US shouldn’t engage in.

The international backdrop is used to emphasize perceived injustices or corrupt activities, suggesting that these issues have far-reaching effects on international relations and on how leaders are perceived.

Vaccine Dataset

The vaccine-related tweets illustrate profound mistrust, anxiety, and suspicion of wrongdoing concerning COVID-19 vaccines and the parties overseeing their development and distribution. The stories connect to larger issues of authority, transparency, and individual versus institutional roles in health decisions.

Vaccine Distrust and Authority Questioning:

Posts express serious doubt about the safety and effectiveness of COVID-19 vaccines, particularly mRNA vaccines. This doubt extends to the institutions endorsing them, like governments, health bodies, and pharmaceutical firms.

Narratives claim severe adverse reactions and deaths following vaccination, stressing the perceived dangers of mRNA technology. They suggest that health issues, referred to as “VAIDS” in some texts, are common but intentionally ignored or hidden by those in power.

Some posts allege deliberate wrongdoing by vaccine producers, including concealing information about vaccine ingredients or potential health hazards.

Conspiracy and Secrecy:

Several stories entertain conspiracy theories, alluding to a coordinated effort by global authorities or groups to hide the truth about vaccines or to use vaccines for sinister ends. Recurring themes include hidden plans, such as vaccines containing undisclosed components, having ties to technology (like 5G), or even serving as instruments of population control.

The narrative accuses public figures and organizations of involvement in these conspiracies, with names like Bill Gates often mentioned. References to “predictive programming” and past events (like autism’s portrayal in media) suggest beliefs in long-term schemes by influential parties.

Good vs. Evil Depiction:

Some texts starkly frame the situation as a battle between benevolence and malevolence. Figures challenging or resisting vaccination initiatives, such as Robert F. Kennedy Jr. or certain officials, are cast as courageous truth-seekers or public defenders. Pro-vaccine individuals and groups, by contrast, are frequently portrayed as harmful or corrupt.

There’s a theme of martyrdom or persecution, in which those questioning vaccine safety purportedly face suppression, mockery, or even death, as in one story about a senator’s plane crash.

Perceived Injustice and Calls for Action:

A robust sense of injustice pervades, with stories claiming that people harmed by vaccines encounter resistance in seeking justice. This is apparent in stories about legal struggles, compensation efforts, and alleged corporate attempts to avoid responsibility.

Numerous texts call for action through legal channels, public demonstrations, or informing others about the purported vaccine risks. These calls convey a sense of urgency and an ethical responsibility to “resist” or to hold accountable those involved.

Divisive Thinking:

The narratives promote a pronounced “us vs. them” attitude, in which vaccine skeptics and those questioning official COVID-19 responses confront a seemingly unified, untrustworthy, or malicious establishment.

This division extends beyond the public-versus-establishment dichotomy to broader societal splits. It highlights partisan views on vaccination and characterizations of people who hold opposing opinions on vaccines or health measures.

Global Impact and Variations:

The global aspect of the vaccine debate is acknowledged, with references to various nations and international organizations.

Different countries are depicted as having diverse approaches to vaccine deployment, acceptance, and mandates, which are either criticized or applauded based on the narrative’s stance.

Updates

- Updated October 25, 2023 to clarify wording and to correct a formatting error.

Citation

@article{infoepi_lab2023,
  author = {InfoEpi Lab},
  publisher = {Information Epidemiology Lab},
  title = {Negative and {Misleading} {Posts} {Driving} {Critical} {Discussions} on {X}},
  journal = {InfoEpi Lab},
  date = {2023-10-24},
  url = {https://infoepi.org/posts/2023/10/24-israel-ukraine-vaccines.html},
  doi = {10.7910/DVN/WRAQ4N},
  langid = {en}
}