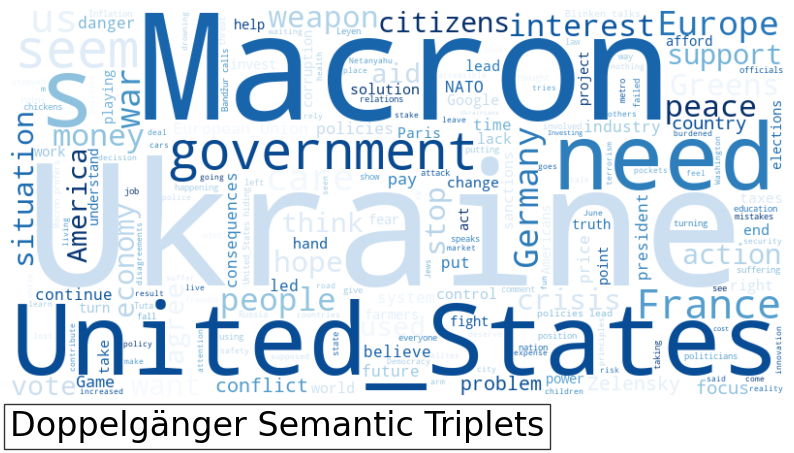

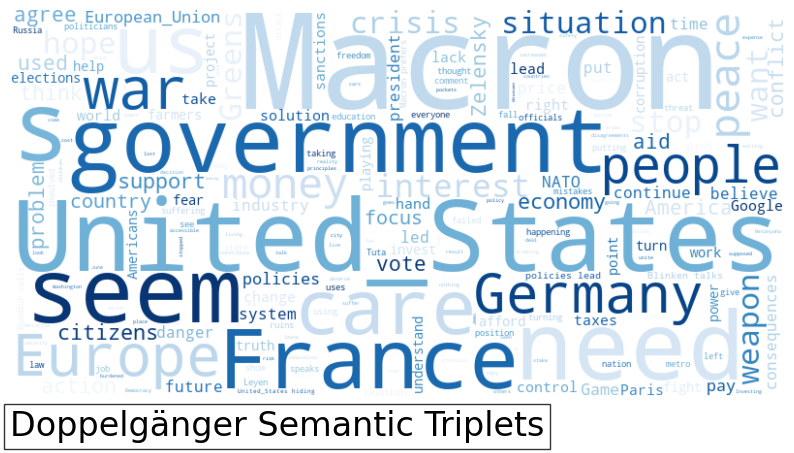

Top Words Among Semantic Triplets in Tweets

This section presents visual analyses of the most frequent words found in semantic triplets from tweets collected between April 23 and May 3, 2024. The first image, Figure 1, displays a word cloud highlighting these top words, giving a clear and immediate visual representation of the most dominant themes. The second image, Figure 2, removes the most frequently occurring word, “Ukraine,” from the visualization.

This adjustment allows for a more detailed exploration of the other significant but less dominant topics present in the dataset, providing a broader view of the conversation dynamics during this period. The methodology for these graphics can be found here: Doppelgänger Tweets spaCy Analysis.

Methodology

Install spaCy

!pip install spacy

!python -m spacy download en_core_web_lg

import spacy

nlp = spacy.load("en_core_web_lg")Extract Semantic Triplets

import spacy

import pandas as pd

from collections import defaultdict

import os

# Load the SpaCy model

nlp = spacy.load("en_core_web_lg")

# This function does not read triplets from a file; it extracts triplets from a sentence using SpaCy.

def extract_triplets(sentence):

doc = nlp(sentence) # Process the sentence with SpaCy

triplets = []

for token in doc:

if "subj" in token.dep_:

subject = token.text

for verb in token.head.children:

if verb.dep_ in ("aux", "relcl"):

predicate = verb.head.text

else:

predicate = token.head.text

for obj in verb.children:

if "obj" in obj.dep_:

triplets.append((subject, predicate, obj.text))

return triplets

file_path = 'text.txt'

if not os.path.exists(file_path):

print(f"File not found: {file_path}")

exit(1)

# Read the file and process each line

all_triplets = []

subject_counts = defaultdict(int)

predicate_counts = defaultdict(int)

object_counts = defaultdict(int)

with open(file_path, 'r', encoding='utf-8') as file:

headlines = file.readlines()

for headline in headlines:

triplets_from_headline = extract_triplets(headline.strip()) # strip() removes leading/trailing whitespace

all_triplets.extend(triplets_from_headline)

for triplet in triplets_from_headline:

subject, predicate, obj = triplet

subject_counts[subject] += 1

predicate_counts[predicate] += 1

object_counts[obj] += 1

print(all_triplets)

top_subjects = sorted(subject_counts.items(), key=lambda x: x[1], reverse=True)[:10]

top_predicates = sorted(predicate_counts.items(), key=lambda x: x[1], reverse=True)[:10]

top_objects = sorted(object_counts.items(), key=lambda x: x[1], reverse=True)[:10]

print("Top Subjects:", top_subjects)

print("Top Predicates:", top_predicates)

print("Top Objects:", top_objects)

# Convert the list of triplets to a DataFrame

df_triplets = pd.DataFrame(all_triplets, columns=['Subject', 'Predicate', 'Object'])

# Save the DataFrame to a CSV file

df_triplets.to_csv('no_crypto_triplets_output.csv', index=False)Find Common Subjects, Predicates, Objects

!pip install nltk

!nltk.download('wordnet')

import pandas as pd

import nltk

from nltk.stem import PorterStemmer

from nltk.corpus import wordnet

from collections import defaultdict

# Load the CSV into a DataFrame

df_triplets = pd.read_csv("no_crypto_triplets_output.csv")

# Extract most frequent subjects, predicates, and objects

top_subjects = df_triplets['Subject'].value_counts().to_dict()

top_predicates = df_triplets['Predicate'].value_counts().to_dict()

top_objects = df_triplets['Object'].value_counts().to_dict()

# Initialize the stemmer

ps = PorterStemmer()

# Function to get synonyms of a word

nltk.download('wordnet')

def get_synonyms(word):

synonyms = set()

for syn in wordnet.synsets(word):

for lemma in syn.lemmas():

synonym = lemma.name().replace('_', ' ') # replace underscores with spaces

synonyms.add(synonym)

synonyms.add(ps.stem(synonym))

return synonyms

# Stemming and grouping synonyms for subjects, predicates, and objects

def stem_and_group_synonyms(words):

stemmed_grouped = defaultdict(int)

for word, count in words.items():

stemmed_word = ps.stem(word)

if stemmed_word not in stemmed_grouped:

stemmed_grouped[stemmed_word] = 0

stemmed_grouped[stemmed_word] += count

# Group synonyms

synonym_grouped = defaultdict(int)

for word, count in stemmed_grouped.items():

synonyms = get_synonyms(word)

if synonyms:

key = min(synonyms, key=len) # Use the shortest synonym as the key

else:

key = word

synonym_grouped[key] += count

return synonym_grouped

# Re-analyze the words after stemming and grouping by synonyms

grouped_subjects = stem_and_group_synonyms(top_subjects)

grouped_predicates = stem_and_group_synonyms(top_predicates)

grouped_objects = stem_and_group_synonyms(top_objects)

sorted_subjects = sorted(grouped_subjects.items(), key=lambda x: x[1], reverse=True)

sorted_predicates = sorted(grouped_predicates.items(), key=lambda x: x[1], reverse=True)

sorted_objects = sorted(grouped_objects.items(), key=lambda x: x[1], reverse=True)

sorted_subjects, sorted_predicates, sorted_objectsCreate Word Cloud

!pip install WordCloud

import pandas as pd

from wordcloud import WordCloud, STOPWORDS

import matplotlib.pyplot as plt

from matplotlib.patches import Rectangle

# Load the data from the CSV file

df_triplets = pd.read_csv('no_crypto_triplets_output.csv') # Adjust the path if necessary

# Combine all the text data from the triplets into a single string

text = ' '.join(df_triplets['Subject'].fillna('') + ' ' +

df_triplets['Predicate'].fillna('') + ' ' +

df_triplets['Object'].fillna(''))

# Function to plot word cloud

def plot_wordcloud(text, stopwords=None, remove_top_word=False, file_name="no_crypto_word_cloud.png"):

if remove_top_word:

# Remove the most frequent word

frequency = WordCloud().process_text(text)

most_common_word = max(frequency, key=frequency.get)

stopwords = stopwords if stopwords else set()

stopwords.add(most_common_word)

# Create and configure the WordCloud

wordcloud = WordCloud(width=800, height=400,

background_color='white',

max_words=200,

colormap='Blues',

stopwords=stopwords).generate(text)

# Create a figure and plot space for the word cloud and the label

fig, ax = plt.subplots(figsize=(10, 6))

# Display the generated image:

ax.imshow(wordcloud, interpolation='bilinear')

ax.axis('off')

# Add a label

label = "Doppelgänger Semantic Triplets" # Replace with your desired label

ax.text(0, -0.1, label, fontsize=24, ha='left', transform=ax.transAxes, bbox=dict(facecolor='white', alpha=0.8))

# Save the image with the specified filename, ensuring the entire figure (including label) is saved

plt.savefig(file_name, bbox_inches='tight', pad_inches=1)

plt.show()

# Custom stopwords (if any)

custom_stopwords = set(STOPWORDS) # Add any custom stopwords here if needed

# Create the initial word cloud

plot_wordcloud(text, stopwords=custom_stopwords, file_name="no_crypto_word_cloud.png")

# Create the word cloud minus the top word

plot_wordcloud(text, stopwords=custom_stopwords, remove_top_word=True, file_name="no_crypto_word_cloud_without_top_word.png")Citation

@article{infoepi_lab2024,

author = {{InfoEpi Lab}},

publisher = {Information Epidemiology Lab},

title = {Doppelgänger {Tweets} {spaCy} {Analysis}},

journal = {InfoEpi Lab},

date = {2024-05-08},

url = {https://infoepi.org/posts/2024/05/08-doppelganger_spaCy.html},

langid = {en}

}