InfoEpi Lab, 2024, "Doppelgänger Series Data", https://doi.org/10.7910/DVN/UPEK5Z, Harvard Dataverse, V2.

dTeam, a group of U.S. researchers who track foreign influence, assisted with data collection and analysis.

Automated accounts on X posting and boosting celebrity interviews with pro-Kremlin voiceovers appear to be the latest effort in the Doppelgänger Operation. The videos exemplify the use of distranslation: the intentional mistranslation, in part or in whole, of real-time conversation, text, audio, or video to deceive or cause harm. It often, but not always, has a political motivation.

Distranslation, noun.

A conversation, text, audio, or video that is intentionally mistranslated, in part or in whole, to deceive, manipulate, or cause harm.

A technique used to distort or manipulate information. Distranslation relates to mistranslation as disinformation relates to misinformation, where the "dis-" form implies deliberate intent to mislead.

What Are the Key Messages?

The overarching message in these videos is that Russia is good and Ukraine is bad. Complementary false quotes cast doubt on the reporting of current events, imply that Ukraine is doing poorly with U.S. military aid, or simply praise Vladimir Putin. The fake quotes boil down to four key ideas:

- I’m famous, and I heard Ukraine is bad.

- I’m famous, and I think Russia is great, or everyone loves Putin.

- I’m famous. Did you hear about this shocking, unethical, or terrible thing Ukraine did?

- I’m famous and believe this conspiracy theory (and so should you).

Who Is the Target Audience?

Non-English-speaking citizens of France: The French content is most likely aimed at French citizens whose government currently supports Ukraine. This audience is not new to this operation or broader pro-Kremlin efforts. France in particular has been targeted with an “overwhelming” volume of pro-Russian propaganda.

Non-English-speaking citizens of Germany: Similarly, these videos are most likely designed to influence German citizens, as their government currently supports Ukraine. Targeting Germany fits with the increasingly aggressive tactics used against the country, such as the recent leak of confidential calls between military officers.

AI Forensics reported in April 2024 that Meta was failing to stop pro-Russian ads targeting Germany and France specifically, which further suggests a focus on these two audiences:

A specific pro-Russian propaganda campaign reached over 38 million users in France and Germany, with most ads not being identified as political in a timely manner.

What Is the Potential Aim of the Videos?

These videos echo other campaigns designed to erode support for Ukraine using celebrities. From different angles, the videos discourage support for Ukraine or portray Russia in a more favorable light.

The videos also encourage distrust in institutions such as media and democratic governments, which generates cynicism and discord. Discrediting media can be particularly dangerous because it can make an audience more receptive to misleading or false messages or less resilient when exposed to information manipulation.

About the Videos

Videos in this dataset were primarily in French and German. Celebrities to whom the pro-Kremlin claims were attributed include Matthew McConaughey, Billie Eilish, Rowan Atkinson, Zendaya, Mick Jagger, Danny DeVito, Domhnall Gleeson, and Ethan Hawke.

In December 2023, Clint Watts, writing for the Microsoft Blog, described exploitation of celebrities to spread pro-Kremlin messaging:

Unwitting American actors and others appear to have been asked, likely via video message platforms such as Cameo, to send a message to someone called “Vladimir”, pleading with him to seek help for substance abuse. The videos were then modified to include emojis, links and sometimes the logos of media outlets and circulated through social media channels to advance longstanding false Russian claims that the Ukrainian leader struggles with substance abuse. The Microsoft Threat Analysis Center has observed seven such videos since late July 2023, featuring personalities such as Priscilla Presley, musician Shavo Odadjian and actors Elijah Wood, Dean Norris, Kate Flannery, and John McGinley.

Although these tactics differ from others we have observed, using celebrities is a popular strategy that does not require AI. Wired, Byline Times, and the bot research group antibot4navalny attributed similar distranslations and fake quotes to the Doppelgänger operation.

Tweets from these accounts exhibited the atypical engagement found in other Doppelgänger cases. Engagement on the posts consisted almost exclusively of retweets, with between 1,300 and 1,700 retweets per post (see also Figure 1, Figure 2, Figure 3, Figure 4).

In each case, the account had published one prior post, and that tweet generally received no engagement. One praised Vitalik Buterin, a Russian-born Canadian and founder of the Ethereum cryptocurrency, and another praised the Central African Republic for embracing cryptocurrency as a means of “financial liberation.” El Salvador received praise for a similar embrace of cryptocurrency in another account’s first post.
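The engagement pattern described above can be expressed as a simple screening heuristic. The sketch below is illustrative only: the `Post` record, the `max_other` tolerance, and the function names are assumptions made for this example and do not reflect the actual schema of the published dataset or the method used in this analysis. It flags accounts whose first post received no engagement and whose second post received retweets in the reported 1,300 to 1,700 band with little other interaction.

```python
from dataclasses import dataclass


@dataclass
class Post:
    # Hypothetical record layout; the published dataset may use different fields.
    retweets: int
    replies: int
    likes: int


def total_engagement(post: Post) -> int:
    """Sum of all interactions on a post."""
    return post.retweets + post.replies + post.likes


def looks_boosted(post: Post, low: int = 1300, high: int = 1700,
                  max_other: int = 5) -> bool:
    """True when retweets fall in the reported band and other engagement is minimal.

    max_other is an assumed tolerance for "almost exclusively retweets".
    """
    return low <= post.retweets <= high and post.replies + post.likes <= max_other


def flag_accounts(posts_by_account: dict[str, list[Post]]) -> list[str]:
    """Return accounts with one ignored prior post followed by one boosted post.

    Each list of posts is assumed to be in chronological order.
    """
    flagged = []
    for account, posts in posts_by_account.items():
        if len(posts) != 2:
            continue
        first, second = posts
        if total_engagement(first) == 0 and looks_boosted(second):
            flagged.append(account)
    return flagged
```

A rule like this would only surface candidates for manual review. Other signals noted in this report, such as profile images and hashtags in the account bio, are not captured here.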

General Observations About the Posts

Promotion of Political Figures: The videos emphasize Vladimir Putin’s popularity among Russians and electoral support in Russia.

Disinformation and Conspiracy: Several videos promote conspiracy theories about Western intelligence agencies, like MI-6, orchestrating events in Russia or manipulating events in Ukraine. One video talked about 9/11, and another about the Crocus Hall terrorist attacks in Moscow.

Emotional and Provocative Content: Claims about Ukrainians are unfavorable. Ascribing behaviors such as rejoicing over a terrorist attack in Russia aims to portray Ukrainians in a negative light. This aligns with other efforts to erode support for Ukraine.

Narrative Laundering Using Celebrity Personas: In every case, a voiceover was added in a different language to a celebrity interview, but the voiceover did not reflect what the celebrity had said. Using celebrities is likely an attempt to increase the video’s persuasive power.

Bitcoin Bots: The accounts participating in this campaign featured NFT cartoon characters as profile images and Bitcoin-related hashtags in their bios.

Examples of Celebrities and Fake Quotes

This section contains examples of the distranslation added as voiceovers of celebrity interviews. As described earlier, each video has a voiceover in a language other than English added to a celebrity interview. The voiceover bears no resemblance to the celebrity’s actual words.

In the case of Billie Eilish, someone has taken her interview with Vanity Fair (Figure 6) and added a pro-Kremlin statement in a French-language voiceover (Figure 5).

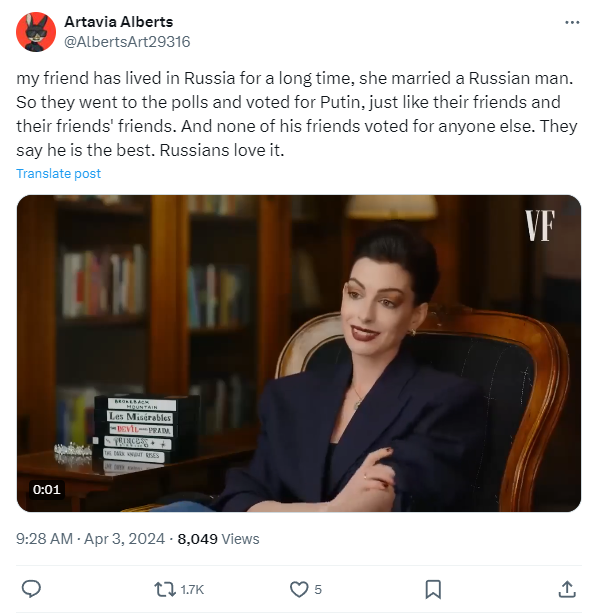

Anne Hathaway Fake Quote Videos

My friend has been living in Russia for a long time. She married a Russian man. So, they went to the polls and voted for Putin, just like their friends and their friends’ friends. And none of their friends voted for anyone else. They say he is the best. Russians adore him.

Links: Archive 1, Archive 2, Video 1, Video 2, Original.

Dieter “Didi” Hallervorden Fake Quote Videos

Governments occupy people, influenced by politics and Germany’s history of crime. Crime among migrants is increasing. In Switzerland, passport applications increase by 22%. Currently, 39% of policies affect citizens and owners.

Links: Archive, Video 1, Original.

Zendaya Fake Quote Videos

Horrible images from Russia have attracted my attention on the way back. Our government tries to convince the world that it is ISIS fighters, but I don’t agree. ISIS is a cover for the MISIS that has ordered the attack. The Ukrainians are the operators. They have organized the escape route. The terrorists are trying to escape to Ukraine. Draw your own conclusions.

Links: Archive 1, Archive 2, Video 1, Video 2, Original.

Ethan Hawke Fake Quote Videos

Have you seen what’s happening in the comments of posts about the terrorist attack in Russia? Ukrainians have fun there, they write how happy they are that people died, how many more have to be killed. And sure you’re on the right side?

Links: Archive 1, Archive 2, Video 1, Video 2, Original.

Matthias Schweighöfer Fake Voiceover Videos

I don’t agree with this sanctions thing. The complexity of geopolitics cannot be reduced to such simplistic statements.

Links: Video 1, Video 2, Original.

Rowan Atkinson Fake Voiceover Videos

I wonder what the Zelenskyy family will do with Charles III’s estate. I’m curious to see the rest of this story.

Links: Video 1, Video 2, Original.

Doppelgänger Series

See all reports from this series on the series list page: Doppelgänger Series. Data from reports in this series can be accessed here:


Citation

@article{infoepi_lab2024,
  author = {{InfoEpi Lab}},
  publisher = {Information Epidemiology Lab},
  title = {Lost in {Distranslation:} {Voiceovers} on {Celebrity} {Videos} {Used} to {Launder} {Pro-Kremlin} {Claims}},
  journal = {InfoEpi Lab},
  date = {2024-04-19},
  url = {https://infoepi.org/posts/2024/04/19-lost-in-distranslation.html},
  langid = {en}
}